LLMs have revolutionized the world by enabling advanced language processing capabilities.But they have also created new security risks, such as prompt injection, stealthy relay attacks, LLMjacking, and instructional jailbreaks. Released in 2025, experts in AI, cybersecurity, and cloud technology developed the OWASP Top 10 Risks for LLM and GenAI applications. The list identified several risks and selected the 10 most critical risks.

In this blog, we will thoroughly discuss the OWASP Top 10 LLM risks and explain how they can be prevented.

Let’s get started!

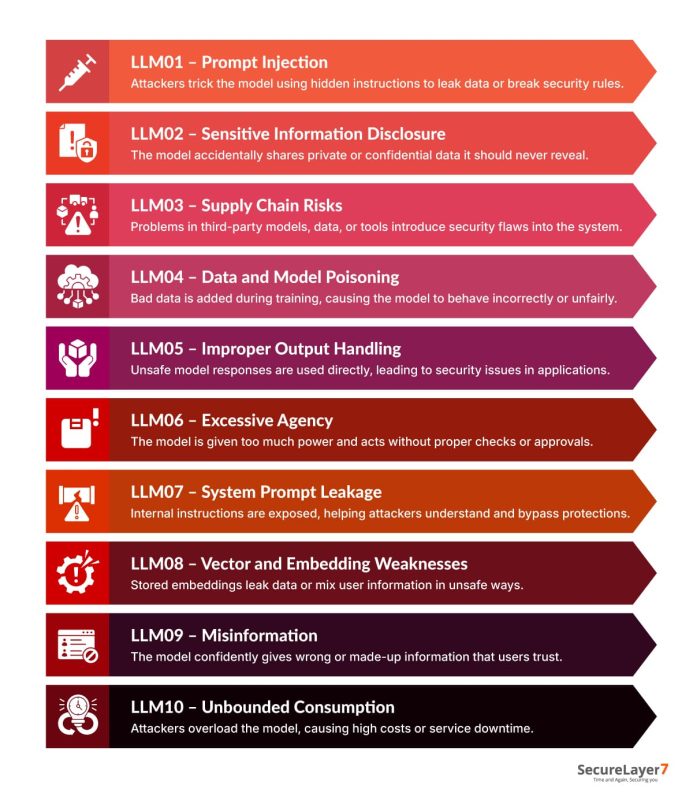

OWASP Top LLM Risks: What’s New in 2025?

The 2025 edition reflects how attacks have evolved in production environments. It adds new categories based on real deployment experience and emerging threats, while refining earlier entries to eliminate overlap and focus on the most significant risks.

Key changes compared to the 2023–2024 edition include the following:

- Prompt Injection stays at #1.

- New entries: Excessive Agency, System Prompt Leakage, Vector and Embedding Weaknesses, Misinformation, and Unbounded Consumption.

- Notable moves: Sensitive Information Disclosure jumps from #6 to #2; Supply Chain risks expand and climb from #5 to #3; Output Handling falls from #2 to #5.

- Broader coverage: Training Data Poisoning now includes Data and Model Poisoning.

- Streamlined structure: Insecure Plugin Design, Overreliance, Model Theft, and Model Denial of Service merged into existing categories.

OWASP Top 10 for Large Language Model (LLM) Applications

Below is the list of cardinal vulnerabilities for generative AI applications built on LLMs! Let’s dive deeper into each.

1. LLM01: Prompt Injections

Prompt injection poses significant security risks to large language model (LLM) applications by exploiting weaknesses in how inputs are processed and interpreted. These weaknesses can lead to serious consequences, including data leakage, unauthorized access, and broader security breaches. Understanding and addressing prompt injection is essential to maintaining the security and integrity of generative AI systems.

Common Prompt Injection Vulnerabilities in LLM Applications

LLM applications are vulnerable to several types of data leakage that can be triggered through prompt injection. These risks typically fall into the following categories:

- Manipulating LLMs:Carefully crafted prompts can trick the model into revealing sensitive information or bypassing built-in safeguards. Attackers exploit weaknesses in token handling, encoding, or prompt interpretation to extract confidential data or gain unauthorized access.

- Contextual Manipulation: By supplying misleading or deceptive context, attackers can steer the model into taking unintended actions. This may cause inaccurate, unsafe, or harmful outputs that influence user decisions or system behavior in ways the application did not intend.

How to Prevent Prompt Injections in LLM Applications

To enhance the security of LLM applications and mitigate prompt injection risks, the following measures should be implemented:

A. Limit Model Behavior

- Clearly define what the model is allowed to do and where its boundaries stop.

- Give it a clear role and keep instructions focused so it doesn’t wander into unsafe territory.

- Make it explicit that core rules cannot be changed, even if the user asks convincingly or creatively.

B. Define and Validate

- Tell the model exactly how responses should look, structure, tone, and level of detail.

- Use validation checks to confirm outputs follow those rules. This helps catch unexpected behavior early and reduces the chance of hidden or misleading responses slipping through unnoticed.

C. Implement Input and Output Filtering

- Identify what types of content are sensitive or risky and filter them before they reach the model or the user.

- Use semantic checks, pattern detection, and relevance checks to spot misuse.

- Review outputs for alignment with the original question and intended context.

D. Enforce Privilege Control and Least Access

- Give the system only the access it absolutely needs to function.

- Keep sensitive tools, credentials, and APIs outside the model’s reach and manage them through secure code. This limits damage if the model behaves unexpectedly or is manipulated.

Examples of Attack Scenarios

- Indirect Injection: A user asks a large language model to summarize a webpage, unaware it contains hidden instructions. The model follows those instructions instead of the user’s intent, inserting a malicious link that quietly sends private conversation data to an external source.

- Unintentional Injection: A company hides AI-detection instructions inside a job description. When an applicant uses an LLM to improve their resume, the model unknowingly triggers those hidden rules, flagging the application without the applicant realizing why it happened.

Organizations can enhance the security of their LLM applications and mitigate potential risks by implementing strong security measures, conducting frequent testing, and remaining vigilant against prompt injection vulnerabilities.

2. LLM02: Sensitive Information Disclosure

Data leakage is a critical concern for Language Models. The inadvertent or unauthorized disclosure of sensitive information can lead to severe consequences, including privacy breaches, compromised intellectual property, and regulatory non-compliance.

Common Sensitive Information Disclosure

These LLM vulnerabilities are called data leakage vulnerabilities. This risk includes the following vulnerabilities:

- Improper Handling of Sensitive Data: Inadequate protocols for handling sensitive information within the LLM application can cause unintended data exposure. This includes situations where sensitive data is inadvertently stored, transmitted in plain text, or stored in easily accessible storage locations.

- Insufficient Access Controls: Weak access controls can allow unauthorized users or entities to access sensitive data. Lack of proper authentication and authorization mechanisms increases the risk of data leakage and unauthorized data access.

- Insecure Data Transmission: Inadequately secured data transmission channels may expose sensitive information to interception and unauthorized access. Failure to utilize encryption protocols or secure communication channels can compromise data privacy during transit.

How to Prevent Sensitive Information Disclosure in LLM Applications

To mitigate the risks of data leakage in LLM applications, it is crucial to implement robust security measures. Consider the following prevention strategies:

A. Data Classification and Handling

- Implement a data classification scheme to identify and categorize sensitive information within the LLM application.

- Apply appropriate security controls, such as encryption and access restrictions, based on the classification level of the data.

B. Access Controls and User Authentication

- Implement strong access controls to ensure only authorized users have access to sensitive data.

- Employ robust user authentication mechanisms, including multi-factor authentication, to prevent unauthorized access and data leakage.

C. Secure Data Storage

- Apply industry-standard encryption techniques to safeguard sensitive data stored within the LLM application.

- Utilize secure storage mechanisms and ensure that access to stored data is restricted to authorized personnel only.

D. Secure Data Transmission

- Employ secure communication protocols, such as HTTPS, for data transmission between the LLM application and other systems or users.

- Encrypt sensitive data during transit to prevent interception and unauthorized access.

- Conduct regular security audits and vulnerability assessments to identify and address potential weaknesses in the LLM application’s data handling processes.

- Use a web application penetration testing checklist to assess the system’s resilience against data leakage attacks.

Common Examples of Sensitive Information Disclosure Scenarios

- Misconfigured Access Controls: A misconfigured access control mechanism allows unauthorized users to gain access to sensitive data within the LLM application. As a result, confidential information is exposed, leading to potential data leakage and privacy breaches.

- Insecure Data Transmission: Sensitive data is transmitted over an insecure channel, such as an unencrypted network connection. An attacker intercepts the data during transit, compromising its confidentiality and potentially leading to data leakage.

3. LLM03: Supply Chain

Supply chain risks associated with LLM vulnerabilities include security risks from compromised training data, models provided by third parties, libraries, APIs, and various deployment platforms. Such loopholes can cause biased and incorrect outputs, data breaches, or system failures if attackers gain unauthorized access to the LLM environment and tamper with external components.

Furthermore, the advent of open-access LLMs, such as LoRA and PEFT, and on-device deployments expands the attack surface.

Common Vulnerabilities and Risks

Several vulnerabilities and risks are associated with supply chains in GenAI applications, including:

- Data Poisoning: Hackers can inject malicious or biased data into LLM training datasets, resulting in unexpected model behaviour, incorrect outputs, or biased results.

- Model Backdoors: Threat actors may infiltrate the LLM ecosystem and embed hidden triggers or fine-tuned components, allowing unauthorized control or manipulation of the model during inference.

- Dependency Exploits: Hackers can exploit vulnerabilities in third-party libraries, APIs, or deployment platforms to gain unauthorized access or disrupt services.

How to Prevent Supply Chain Risks in LLM Applications

To prevent and mitigate the risks associated with inadequate sandboxing in LLM applications, the following measures should be considered:

A. Vet Data Sources and Suppliers Thoroughly

- Data from third-party sources and vendors can be risky. It is essential to evaluate these risks, including their terms and conditions and privacy policies.

- Always work with trusted suppliers and periodically audit their security posture.

B. Manage Third-Party Components Proactively

- Check whether they have implemented “OWASP Top 10 A06:2021 – Vulnerable and Outdated Components” mitigations, such as vulnerability scanning, patch management, and regular updates.

- Ensure these controls are in place in both production and development environments.

C. Conduct Rigorous Red Teaming and Model Evaluation

Always work with reliable and trustworthy red-teaming service providers to obtain quality output. They use standard best practices and continuously fine-tune their processes, practices, and methodologies to stay ahead of threat actors.

D. Maintain an Accurate Software Bill of Materials (SBOM)

Make it a policy to maintain an up-to-date and signed inventory of all software components, leveraging SBOM best practices. This helps proactively detect tampering and prepares you for incident response. You can start with OWASP CycloneDX.

Real-World Examples of Supply Chain Scenarios

- Unauthorized System Access: Inadequate sandboxing allows an attacker to exploit vulnerabilities within the LLM application and gain unauthorized access to the underlying system. This can lead to unauthorized data access, system manipulation, and potential compromise of critical resources.

- Data Exfiltration: Insufficient sandboxing enables an attacker to extract sensitive data from the LLM application or its connected systems. By bypassing containment measures, the attacker can exfiltrate valuable information, leading to data breaches and potential legal and reputational consequences.

- Establish Proper Isolation: Organizations can mitigate OWASP supply chain security risks associated with generative AI applications by establishing proper containment and isolation of the LLM environment to safeguard against unauthorized access, prevent data exfiltration, and protect application integrity.

4. LLM04: Data and Model Poisoning

Data poisoning poses a significant threat to LLM applications, potentially leading to compromised model performance, biased outputs, or malicious behaviour.

Training data poisoning refers to the deliberate manipulation or injection of malicious or biased data into the training process of a large language model. This can lead to the model learning undesirable behaviours, producing biased or harmful outputs, or becoming vulnerable to adversarial attacks. Training data poisoning can compromise the fairness, accuracy, and security of LLM applications.

Common Vulnerabilities and Risks

Several vulnerabilities and risks are associated with training data poisoning in LLM applications, including:

- Bias Amplification: Poisoned training data can introduce or amplify biases present in the data, leading to biased outputs or discriminatory behaviour by the LLM application.

- Malicious Behaviour Induction: Poisoned data can be strategically crafted to induce the LLM model to exhibit malicious behaviour, including generating harmful content, promoting misinformation, or manipulating user interactions.

- Adversarial Attacks: Adversaries can intentionally inject poisoned data to exploit vulnerabilities in the LLM model and bypass security measures, leading to data exfiltration, unauthorized access, or manipulation of system outputs.

How to Prevent Data Poisoning

To prevent training data poisoning and mitigate associated risks, the following measures should be implemented:

- Data Quality Assurance: Establish rigorous data quality assurance processes to ensure the integrity and reliability of training data, including validation, cleaning, and verification.

- Data Diversity and Representativeness: Ensure training data is diverse, representative, and free from explicit or implicit biases.

- Adversarial Data Detection: Employ techniques to detect and mitigate adversarial data injections during training, such as anomaly detection and statistical analysis.

- Regular Model Monitoring and Evaluation: Continuously monitor and evaluate model performance and behaviour to identify emerging issues or suspicious patterns.

Examples of Data Poisoning

- A training dataset contains biased samples that disproportionately favour one group, leading to biased classifications.

- An adversary injects poisoned data samples to manipulate the model’s behaviour and bypass security controls.

5. LLM05: Improper Output Handling

Improper Output Handling risks arise when LLM models are deployed without checking, cleaning, or managing content. Organizations may generate and use content without proper validation, effectively giving users indirect control over additional features.

Outputs may contain vulnerabilities exploitable by threat actors, causing serious security issues such as cross-site scripting (XSS), cross-site request forgery (CSRF), server-side request forgery (SSRF), and remote code execution.

Common Vulnerabilities and Risks

- Excessive Privileges Granted to LLM: Granting unnecessary privileges can lead to privilege escalation or remote code execution.

- Vulnerability to Indirect Prompt Injection Attacks: Indirect prompt injection allows attackers to manipulate inputs affecting other users’ environments, resulting in unauthorized access.

- Inadequate Input Validation in Third-Party Extensions: Poor input validation may allow unsafe outputs to propagate downstream, increasing exploitation risk.

How to Prevent Improper Output Handling in LLM Applications

To prevent and mitigate unauthorized code execution risks in generative AI-based LLM applications, consider the following measures:

A. Treat the Model as a Zero-Trust User

- Implement a zero-trust approach by treating the LLM like any untrusted user.

- Never trust its output without validation and authentication before consumption.

B. Apply Robust Input Validation and Sanitization: Use strict validation and sanitization of all inputs and outputs to minimize injection risks.

C. Encode Model Outputs for User Delivery: Encode LLM outputs before presenting them to users to prevent JavaScript or Markdown exploits.

Examples of Unauthorized Code Execution

- Extension Failure: An LLM extension passes output without validation, causing malfunction and maintenance issues.

- Data Exfiltration: A malicious prompt causes an LLM to capture and exfiltrate sensitive data to an attacker’s server.

6. LLM06: Excessive Agency

While designing LLM-based systems, developers may grant limited control for actions such as calling functions or using extensions based on user prompts. In some cases, these actions are decided by an LLM agent analyzing input or prior outputs.

Excessive agency becomes a vulnerability when systems autonomously take harmful actions due to unexpected or manipulated outputs.

Common Vulnerabilities and Risks

- Excessive Functionalities: Providing more capabilities than required increases misuse risk.

- Excessive Permissions: Unnecessary permissions enable unauthorized operations or privilege escalation.

- Excessive Autonomy: Autonomous actions without human oversight increase risk, especially under ambiguous prompts.

How to Prevent Excessive Agency Vulnerabilities in LLM Applications

A. Limit the Number of Extensions

- Treat every extension like new code entering your environment. If it doesn’t clearly add value, don’t allow it.

- Review extensions regularly and remove ones that are unused, outdated, or poorly maintained.

- Fewer extensions mean fewer things to monitor, patch, and worry about when something goes wrong.

B. Restrict Extension Functions

- Keep each extension focused on doing one job well instead of trying to do everything.

- Turn off features that aren’t actually used, especially those touching files, credentials, or external systems.

- Make sure extensions can’t quietly expand their behavior beyond what they were approved for.

C. Avoid Broad or Open-Ended Extensions

- Stay away from extensions that can run arbitrary commands or pull data from any URL without limits.

- Avoid tools that behave like “mini platforms” inside your environment.

- Prefer predictable, well-scoped functionality over flexible but risky capabilities.

D. Limit Extension Permissions

- Give extensions only the access they absolutely need to function—nothing more.

- Avoid blanket permissions; scope access to specific APIs, datasets, or actions.

- Recheck permissions after updates, since new versions often request more access than before.

E. Run Extensions with User Context

- Make sure extensions act on behalf of the logged-in user, not with elevated or shared privileges.

- Prevent background execution that bypasses normal access controls.

- Log actions clearly so it’s always possible to trace who triggered what and when.

Example of Excessive Agency Vulnerabilities Scenario

An AI-powered email assistant uses a full mailbox access extension. Malicious emails exploit indirect prompt injection, causing data exfiltration. Safeguards include read-only extensions, restricted OAuth scopes, and manual user approval before sending emails.

7. LLM07: System Prompt Leakage

System prompt leakage exposes sensitive information embedded in instructions guiding model behaviour. These prompts may unintentionally reveal credentials or access controls. Prompt secrets should not be treated as security controls.

Attackers can infer guardrails by observing responses. The true risk lies in exposed sensitive information, bypassed controls, or poor privilege separation.

Common Vulnerabilities and Risks

- Exposure of Sensitive Functionality: Prompts may disclose API keys, credentials, or system architecture.

- Exposure of Internal Rules: Internal logic disclosure enables attackers to bypass controls.

- Revealing Filtering Criteria: Attackers can craft inputs to bypass filters once rules are exposed.

How to Mitigate Overreliance on LLM-Generated Content

A. Human Validation and Critical Evaluation

- Treat AI output as a first draft, not a final answer. Someone with domain knowledge should always review it.

- Encourage teams to question responses instead of assuming correctness just because they sound confident.

- Build review checkpoints into workflows, especially for decisions that affect customers, money, or security.

B. Diverse Training Data

- Use models trained on broad, representative data so outputs don’t lean toward narrow or biased perspectives.

- Regularly evaluate whether the data reflects current realities, not outdated assumptions.

- When fine-tuning, include real-world edge cases to reduce blind spots and oversimplified answers.

C. User Education and Awareness

- Make sure users understand what the model can and cannot do, especially where it tends to guess or hallucinate.

- Train teams to treat AI as a support tool, not an authority.

- Share examples of common failure patterns so users learn to spot them early.

Example Scenarios of Overreliance

- Dissemination of Misinformation: When teams rely on LLM-generated content without checking it carefully, wrong information can slip through unnoticed. Once published, these mistakes spread fast and are hard to undo. Over time, this chips away at trust, hurts credibility, and can even create legal or compliance problems, especially in public-facing or regulated environments.

- Biased Decision-Making: Hiring systems trained on historical data often inherit the same biases found in previous decisions. If their outputs are accepted without review, certain candidates may be unfairly favored or rejected. This can quietly reinforce discrimination, expose organizations to legal risk, and undermine diversity efforts, even when there was no intent to be unfair.

8. LLM08: Vector and Embedding Weaknesses

Vulnerabilities in vectors and embeddings within Retrieval-Augmented Generation (RAG) can cause serious security risks. Weaknesses in generation, storage, or retrieval enable malicious content injection, output manipulation, or data leakage.

Common Vulnerabilities and Risks

- Unauthorized Access and Data Leakage: Poor access controls expose sensitive embedding data.

- Cross-Context Information Leaks: Multi-tenant vector databases may leak data between users.

- Embedding Inversion Attacks: Attackers reverse embeddings to recover sensitive source data.

How to Prevent Vector and Embedding Vulnerabilities

A. Access Control and Encryption

- Limit access to vector databases and embeddings strictly to users and services that genuinely need it.

- Use strong encryption for data stored in databases and during transmission between services to prevent interception.

- Separate access by role or tenant so one user’s data cannot be queried or inferred by another.

B. Data Anonymization and Validation

- Remove or mask personally identifiable and sensitive information before converting data into embeddings.

- Validate all data sources to ensure content is trusted, accurate, and free from hidden instructions or manipulation.

- Regularly review embedded data to catch outdated, corrupted, or poisoned inputs before they affect responses.

C. Monitoring and Secure Updates

- Track access logs and query patterns to quickly spot unusual behavior or unauthorized embedding access.

- Set alerts for sudden spikes in usage or abnormal retrieval activity that may indicate abuse.

- Apply security patches and model updates promptly to close known vulnerabilities and reduce exposure over time.

Example Scenario

An attacker embeds hidden instructions inside a resume that gets indexed into a vector database. When the hiring system retrieves it during candidate evaluation, the model follows those instructions, manipulating rankings or outputs without the recruiters realizing the system has been influenced.

9. LLM09: Misinformation

Misinformation occurs when LLMs generate believable but false content, causing security, legal, or reputational risks. Hallucinations arise from statistical pattern completion rather than factual understanding.

Common Vulnerabilities and Risks

- Factual Inaccuracies: LLMs can present incorrect information as fact, leading users to make wrong decisions. In business, legal, or medical contexts, even small inaccuracies can create serious operational, financial, or compliance risks.

- Unsupported Claims: Models may generate confident statements without evidence, especially in specialized domains. When trusted blindly, these fabricated claims can cause legal exposure, patient harm, or flawed policy and business decisions.

- Misrepresentation of Expertise: LLMs often speak with confidence regardless of certainty. This false authority can mislead users into trusting advice beyond the model’s actual knowledge, reducing critical thinking and increasing the risk of poor judgment.

How to Prevent Misinformation

A. Retrieval-Augmented Generation (RAG)

- Pulls information from trusted, up-to-date sources instead of relying only on the model’s memory, reducing made-up answers.

- Grounds responses in real documents, policies, or databases, which improves accuracy in legal, medical, and technical use cases.

- Makes outputs easier to audit, since answers can be traced back to the sources used during generation.

B. Model Fine-Tuning

- Tailors the model to your domain, reducing generic or misleading responses that don’t fit real-world contexts.

- Helps correct recurring errors or biases by reinforcing preferred patterns and discouraging unsafe behavior.

- Improves consistency across responses, making outputs more reliable for repeated or high-stakes usage.

C. Cross-Verification and Human Oversight

- Ensures critical decisions are reviewed by subject-matter experts before being acted upon or published.

- Catches subtle errors, bias, or hallucinations that automated checks often miss.

- Builds accountability and trust by keeping humans responsible for final outcomes, not the model.

Example Scenario

A company rolls out a medical chatbot without fully checking whether it gives safe or reliable advice. Over time, the chatbot starts sharing incorrect information, and some users end up getting hurt because they trusted it. The fallout is serious: legal action, loss of trust, and reputational damage. There was no attacker behind this. The problem came from relying too heavily on an AI system that wasn’t properly reviewed or supervised. It’s a clear example of how real harm can happen even when no one is trying to break the system.

Let’s understand this with a real world example:

In 2024, Air Canada ran into trouble after its website chatbot gave a passenger wrong information about bereavement fares. Travelers relied on advice, paid more than necessary, and later challenged the airline.

A Canadian tribunal ruled that Air Canada was responsible for what its chatbot said, rejecting the idea that the bot was “separate” from the company. The case shows how small AI mistakes can quickly turn into real legal and trust issues.

10. LLM10: Unbounded Consumption

Unbounded consumption allows excessive inference, enabling denial-of-service, financial loss, model theft, or performance degradation.

Common Vulnerabilities and Risks

- Denial of Service (DoS):An attacker doesn’t need to exploit a vulnerability to disrupt the service. By overwhelming the model with high-volume or compute-intensive requests, they can degrade performance or trigger outages. This directly impacts availability for customer-facing and business-critical workloads.

- Denial of Wallet: In this scenario, the service continues to function, but costs escalate quietly. Automated or abusive usage exploits usage-based pricing models, driving up inference and infrastructure spend. The financial impact often appears before operational issues are detected.

- Resource Exhaustion: Certain inputs are designed to consume disproportionate compute and memory. Sustained over time, this degrades performance across the platform, increases latency, and stresses supporting infrastructure. Left unchecked, it undermines reliability and erodes confidence in the system.

How to Prevent Unbounded Consumption Risk

Unbounded consumption risk can be prevented using following best practices:

A. Input Validation

- Enforce strict limits on input size, depth, and structure to prevent oversized prompts that strain compute resources.

- Block malformed, recursive, or intentionally complex inputs designed to trigger worst-case model behavior.

- Validate inputs early in the request pipeline so harmful prompts are rejected before reaching the model.

B. Limit Exposure of Logits and Logprobs

- Avoid exposing token probabilities or confidence scores unless absolutely required for legitimate use cases.

- Restrict access to these values to internal systems, not end users or external APIs.

- Reducing this visibility makes it harder for attackers to reverse-engineer model behavior or extract sensitive signals.

B. Rate Limiting

- Apply per-user and per-IP request limits to prevent abusive traffic from overwhelming the system.

- Use dynamic throttling to slow requests when unusual usage patterns or spikes are detected.

- Combine rate limits with authentication to ensure fair resource usage and protect availability for legitimate users.

Example of Unbounded Consumption Risks

- Unchecked Input Size: An attacker sends extremely large chunks of text to an LLM-powered system. The model tries to process it all, consuming too much memory and CPU in the process. This can slow the service down or even cause it to crash, affecting everyone else who’s trying to use it.

- Request Flooding: An attacker repeatedly sends a high number of requests to the LLM API in a short time. The system gets overwhelmed handling the load, which can degrade performance or make the service unavailable for legitimate users.

Why OWASP LLM Risks Are Different

GenAI systems operate fundamentally differently from traditional applications, creating unique security challenges. Instead of fixed code paths, they interpret natural language instructions at runtime, meaning attackers can manipulate behavior through carefully crafted text alone.

These large language models are probabilistic rather than deterministic. They can hallucinate, produce inconsistent outputs, and generate plausible-sounding errors that are hard to detect.

Unlike traditional apps with predictable resource usage, GenAI systems are vulnerable to expensive prompt attacks that can drain budgets through cascading API calls and recursive operations. The OWASP GenAI Top 10 provides the structured framework needed to identify and mitigate these risks that don’t fit conventional security models.

Final Thoughts

The OWASP Top 10 Risks for LLM Applications 2026 provides an updated, forward-looking assessment of GenAI security risks, empowering developers and security teams to build resilient GenAI systems. As LLMs become integral to applications, understanding these risks is critical.

Concerned about the OWASP Top LLM Security Risks in your GenAI applications? SecureLayer7 can help organizations identify, test, and mitigate real-world LLM threats through structured red-teaming and security validation. Contact Us to get started.

Reference sources:

Augment LLMs with RAGs or Fine-Tuning | Microsoft Learn

[2304.09655] How Secure is Code Generated by ChatGPT?

Slack AI data exfiltration from private channels via indirect prompt injection

Plugin Vulnerabilities: Visit a Website and Have Your Source Code Stolen · Embrace The Red

FAQs on OWASP Top 10 LLM Security Risks

LLM vulnerabilities stem from behavioural manipulation rather than code flaws, unlike traditional issues such as SQLi or XSS.

Governance focuses on predictability, safety, accountability, and data lineage.

Layered defenses, monitoring, access controls, prompt validation, isolation zones, and regular red-team testing are most effective.