A Comparative Analysis: The Top 8 API Security Testing Tools

March 27, 2024

Red Team Assessments: A Complete Guide

April 5, 2024

Large Language Models (LLMs) have revolutionized Natural Language Processing tasks, offering capabilities such as translation, text generation, summarization, and conversational AI. However, along with their benefits, LLMs also present significant security challenges. Here’s a deep dive into everything you need to know about LLM security, including its components, importance, challenges, and strategies for implementation.

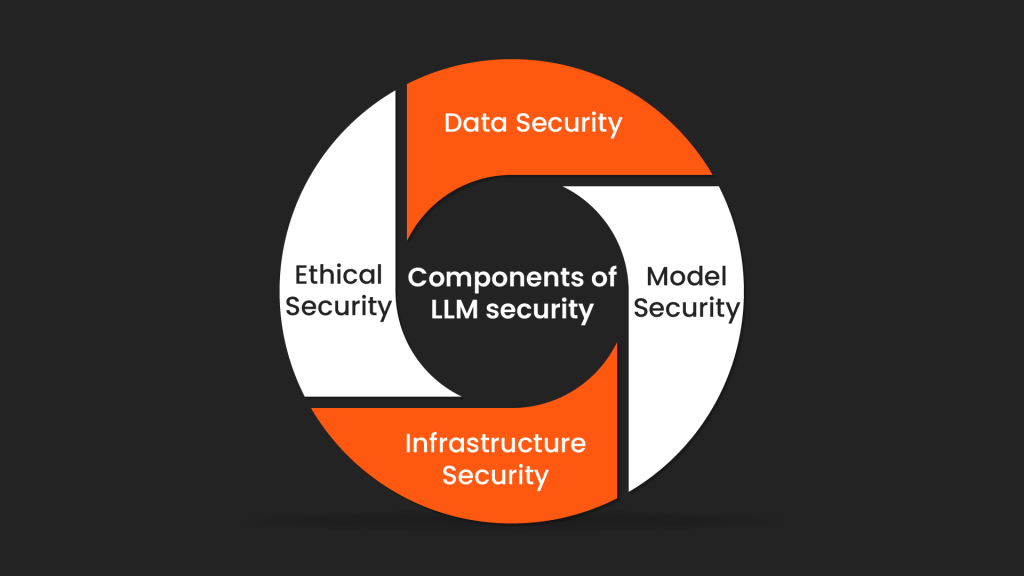

Components of LLM Security

Here’s an overview of the components of LLM security.

Data Security

Securing the training data used to train Large Language Models (LLMs) is of utmost importance in maintaining the integrity and reliability of the model’s outputs. Without proper data security measures, malicious actors could exploit vulnerabilities in the training data to manipulate the model, leading to the generation of misleading or harmful information. To address this concern, organizations employ various techniques such as data provenance and secure anonymization.

Data provenance involves tracking the origin and history of training data, ensuring transparency and accountability in the dataset’s creation process. By maintaining a clear record of how data is collected, processed, and used, organizations can identify and address any potential issues or biases present in the training data.

Secure anonymization techniques are utilized to protect sensitive information within the training data while still preserving its utility for model training. This involves removing or obfuscating personally identifiable information (PII) and other sensitive data elements to prevent unauthorized access or misuse.

Model Security

Protecting the LLM model itself from adversarial attacks is essential to maintain its reliability and trustworthiness. Adversarial attacks are malicious attempts to manipulate the model’s inputs or outputs, leading to unintended results or compromising its performance. To mitigate these risks, organizations employ techniques such as adversarial training and secure model architectures.

Adversarial training involves exposing the model to adversarial examples during the training process, allowing it to learn robust representations that are resistant to manipulation. By incorporating adversarial examples into the training data, the model can better recognize and defend against potential attacks in real-world scenarios.

Secure model architectures refer to the design and implementation of LLMs with built-in security features to protect against various attack vectors. This includes measures such as input validation, output verification, and model monitoring to detect and prevent unauthorized access or tampering.

Infrastructure Security

Securing the underlying infrastructure that supports LLM deployment is critical to safeguarding against unauthorized access and data breaches. This involves implementing access control and network security measures to protect sensitive data and prevent malicious actors from compromising system integrity.

Access control mechanisms regulate user access to LLM resources, ensuring that only authorized individuals or entities can interact with the model or its associated data. This may involve user authentication, role-based access control (RBAC), and fine-grained permissions to enforce security policies and prevent unauthorized activities.

Network security measures, such as firewalls, intrusion detection systems (IDS), and secure communication channels, are deployed to monitor and control network traffic to and from the LLM infrastructure. This helps detect and mitigate potential threats, including malware, denial-of-service (DoS) attacks, and unauthorized data exfiltration.

Ethical Security

Addressing the ethical implications of LLM outputs is crucial to ensure responsible and trustworthy use of the technology. LLMs have the potential to generate biased or harmful outputs that perpetuate stereotypes, misinformation, or discriminatory behavior. To mitigate these risks, organizations employ techniques such as bias detection algorithms and human review processes.

Bias detection algorithms are used to identify and quantify biases present in LLM outputs, allowing organizations to assess and mitigate their impact. By analyzing patterns and trends in the model’s behavior, bias detection algorithms can highlight areas of concern and guide corrective actions to promote fairness and equity.

Human review processes involve human evaluators manually inspecting LLM outputs to identify and correct any biases or errors. This human-in-the-loop approach ensures that subjective judgments and contextual nuances are considered when assessing the quality and appropriateness of LLM-generated content. By combining automated bias detection algorithms with human review processes, organizations can effectively address ethical concerns and uphold high standards of integrity and accountability in LLM deployment.

Importance of LLM Security

Let’s now dive into why LLM security is crucial.

Protecting Sensitive Information

In today’s digital landscape, the protection of sensitive information is paramount. Large Language Models (LLMs) often handle data that is confidential or personal, such as medical records, financial transactions, or private communications.

Ensuring data security within LLMs is crucial to prevent unauthorized access and potential breaches that could compromise user privacy. A robust LLM security system employs encryption, access controls, and secure data handling practices to safeguard sensitive information from unauthorized access or exposure.

Safeguarding Financial Interests

The use of LLMs in financial applications introduces unique security challenges. Any breach in the security of these models could have severe financial consequences, leading to monetary losses for individuals and institutions alike. By implementing stringent security measures, organizations can mitigate the risk of attackers manipulating LLM outputs for financial gain.

This includes measures such as secure authentication, encryption of financial data, and real-time monitoring for suspicious activity to ensure the integrity of financial transactions and protect against fraud.

Ensuring Model Reliability

The reliability of LLM outputs is crucial, especially in applications where accuracy is paramount, such as scientific research or medical diagnosis. Security threats like data poisoning, where malicious actors manipulate training data to distort model outputs, pose significant risks to the reliability of LLMs.

To mitigate such threats, robust security measures must be implemented throughout the model’s lifecycle. This includes rigorous validation of training data, continuous monitoring for anomalies, and prompt response to any detected security breaches. By ensuring the reliability of LLM outputs, organizations can maintain trust in the integrity of their applications and prevent potential harm caused by inaccuracies or manipulations.

Challenges to LLM Security

Despite the numerous advantages offered by LLMs, ensuring their security presents various challenges. This section explores some of the key challenges encountered in maintaining the security of LLMs.

Identifying Security Threats

The landscape of LLM security threats is dynamic and continuously evolving. This dynamic nature makes it difficult to detect and anticipate potential attacks effectively. Threat actors may exploit vulnerabilities in the model’s architecture or manipulate training data to achieve their malicious objectives.

Identifying these threats requires a deep understanding of the intricacies of LLMs, including their architecture, training data, and potential attack vectors. However, due to the complexity of LLMs, this task can be challenging and often requires specialized expertise.

Risk Assessments

Traditional risk assessment methodologies may fall short in adequately addressing the unique security risks associated with LLMs. As a relatively new technology, our understanding of the potential security implications of LLM deployment is still evolving.

Traditional risk assessment frameworks may not fully capture these emerging threats, leaving organizations vulnerable to unforeseen security breaches. Consequently, there is a need for tailored risk assessment approaches that take into account the specific characteristics and vulnerabilities of LLMs.

Implications of Risks

The implications of security risks on LLMs can be far-reaching, affecting not only the functionality of the models but also their reputation and societal trust. Security breaches in LLMs can lead to various adverse consequences, including data manipulation, theft of sensitive information, and accidental disclosure of internal data.

These implications can undermine the reliability and integrity of LLM outputs, eroding user confidence and potentially causing significant harm. Therefore, it is essential for organizations to proactively address security risks to mitigate their potential impact on LLMs and their stakeholders.

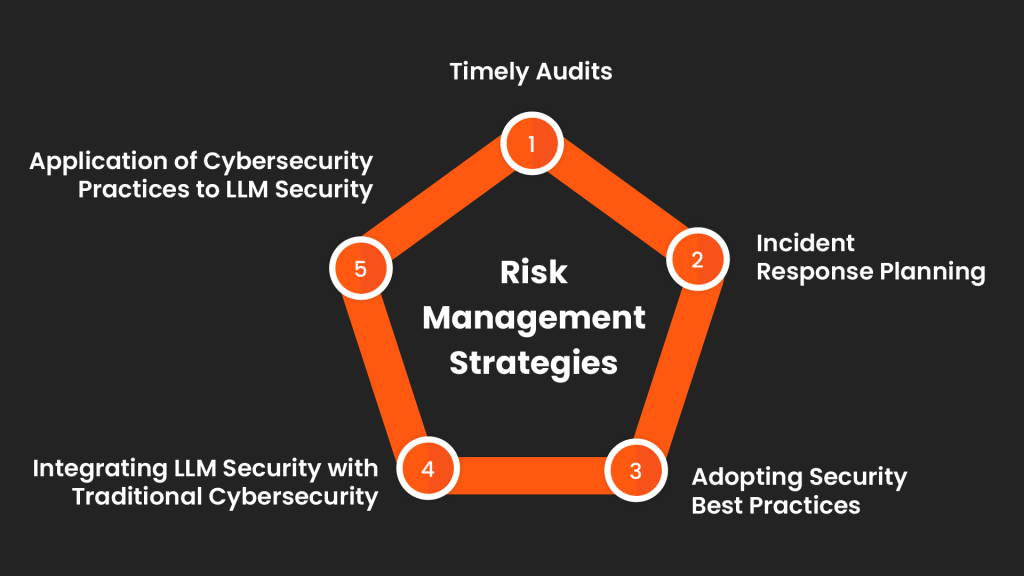

Risk Management Strategies

Here are some best practices to help with LLM risk management.

Timely Audits

Regular security audits of the LLM, training data, and underlying infrastructure help identify vulnerabilities before they can be exploited.

Incident Response Planning

A well-defined incident response plan ensures a swift and coordinated response to security breaches, mitigating damage, and restoring normal operations.

Adopting Security Best Practices

Implementing best security practices across all stages of the LLM lifecycle is essential, including secure data handling practices, robust access controls, and ongoing monitoring for suspicious activity.

Integrating LLM Security with Traditional Cybersecurity

Combining traditional cybersecurity methods with LLM security measures provides comprehensive protection. Penetration testing and vulnerability assessments help identify and address vulnerabilities in both LLMs and traditional IT systems.

Application of Cybersecurity Practices to LLM Security

Established cybersecurity practices such as secure coding principles, machine learning detection and response, and security orchestration automation can be directly applied to LLM security.

Best Practices for LLM Security Deployment

Ensuring the security of Large Language Models (LLMs) is critical to their effective and responsible use. This section outlines some of the best practices organizations can adopt when deploying LLMs to mitigate security risks and safeguard sensitive information.

Constant Monitoring

Continuous monitoring of LLM behavior is essential to identify and respond to security threats in real-time. By monitoring LLM outputs, organizations can detect anomalies, track for biases, and identify any unauthorized access attempts. This proactive approach enables prompt intervention and mitigation of potential security breaches, ensuring the integrity and reliability of LLM operations.

Training and Awareness

Raising awareness of LLM security threats among stakeholders is crucial for effective security management. Providing comprehensive training on secure LLM usage helps users understand potential risks and adopt best practices to mitigate them. By educating stakeholders on security protocols and risk mitigation strategies, organizations can enhance the overall security posture of their LLM deployments.

Using LLMs Ethically

Ethical considerations are paramount in the deployment of LLMs to ensure fair and unbiased outcomes. Implementing bias detection algorithms and human review processes helps mitigate biases within the model and its outputs.

By proactively addressing biases, organizations can promote fairness, transparency, and accountability in LLM usage, fostering trust among users and stakeholders.

OWASP LLM Detection Checklist

The OWASP Top 10 for Large Language Models offers a comprehensive checklist for addressing LLM security concerns. This checklist covers various areas and tasks to protect organizations as they develop and deploy LLM strategies.

By following the OWASP guidelines, organizations can systematically address security vulnerabilities and ensure the secure and responsible deployment of LLMs.

Conclusion

Implementing top-notch security measures for LLMs is complex but crucial. We at SecureLayer7 understand this, and offer comprehensive services to make the process easier. Our expertise extends to threat assessments,vulnerability testing, monitoring and incident response planning.

Contact us today to learn more about these and other penetration testing services that have made SecureLayer7 a globally preferred cyber-security services provider.