Artificial intelligence is now at the core of everything in the digitally driven world. From customer help desks and corporate knowledge systems to domain-specific platforms, it’s everywhere.

The soul of AI systems is LLMs like GPT-4, Claude, and Llama 3. They can process unstructured data, make sense of it, retrieve information from external sources, and trigger several downstream actions. This has raised productivity to the next level.

But at the same time, LLMs have also created security openings that never existed before. These openings go beyond familiar web security issues and require a shift in approach to address them. Security teams cannot manage these risks with traditional app pentests.

Protecting these AI-powered systems requires understanding the model’s behaviour and the orchestration code around it—such as the scripts that pass prompts, how it connects with other tools, and how it works with outside services.

Why Traditional Application Penetration Testing Miss the Mark

Traditional penetration testing methodology primarily focuses on OWASP Top 10 vulnerabilities, such as SQL injection, cross-site scripting, or misconfigured infrastructure. On the other hand, LLM-integrated applications behave differently and contain different kinds of security risks, such as LLMjacking.

- They can accept adversary-controlled text inputs.

- They may dynamically retrieve and trust external data.

- They can execute complex sequences of actions without any human approval.

Learn more about LLMjacking security risks

These risks are not merely perception. They are real. Recent demonstrations have shown how attackers can abuse LLMs to their advantage:

DEF CON 2024 – Multi-Turn Adversarial Agents

At DEF CON 2024, Meta’s AI red team demonstrated how Llama 3 could be tricked by a series of prompts. A single attack might fail, but a carefully chained conversation could force LLM to slack safeguards.

Instead of giving one blunt attempt, attackers provided dialogues step-by-step that forced the model to reveal harmful or unintended replies.

AI Assistant Side-Channel Leak

Developers pulled out encrypted conversation data from an AI assistant without viewing the text. They just observed the minor differences in response times and resource usage. It’s an altogether new kind of privacy threat: leaking secrets through behavior, not content.

Promptware via Calendar Invites

Attackers hid instructions inside calendar events. When the AI assistant read the invite, it acted by sending messages, sharing location without the user saying a word.

The trick worked because calendar and AI services talk to each other quietly in the background. Thus, a seemingly harmless-looking meeting description became a Trojan horse for control.

Slopsquatting Attacks

Hackers designed and developed an AI hallucinated software system, laced it with malicious code, and posted it online.

Developers,trusting the suggestion, downloaded it 30,000 times before they knew the reality. It’s a stark reminder of what can happen in the AI-driven world: AI creativity can fabricate dangers just as easily as ideas.

AI Worms (“Morris II”)

Suppose a message has been cleverly written so that an AI passes it along to another AI, which passes it on again, each time leaking data or infecting systems.

That sounds unthinkable, but “Morris II” did it . These are self-replicating prompts spread through connected AI tools and they multiply like a virus in a network of machines.

Conventional Bugs in “Smart” Tools

Not all AI security threats are exotic. Microsoft recently fixed a path-traversal bug in its NLWeb AI search tool that let attackers peek into sensitive configuration files. This is an old-school security loophole. But the stakes were higher because AI had been layered on top. The lesson is that even smart tools need basic security hygiene.

These incidents show that AI application pentesting must go well beyond testing for prompt injection alone.

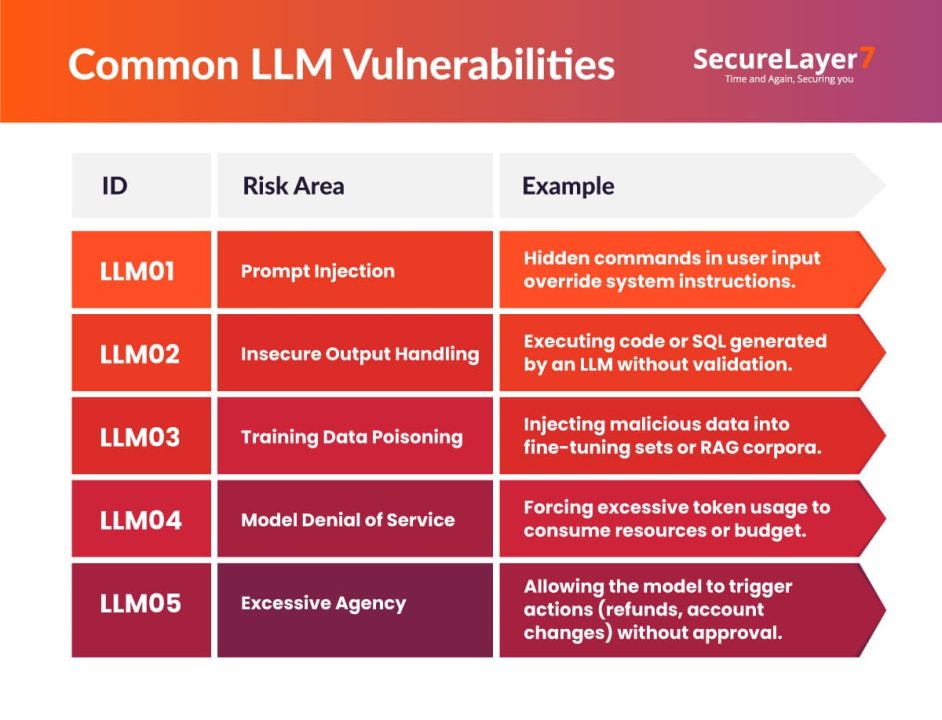

Common Vulnerabilities in LLM-Powered Apps

The OWASP GenAI Security Project’s Top 10 for Large Language Model Applications provides a useful baseline:

AI App Penetration Testing Methodology

Effective AI app pentesting involves a layered approach. Here is the detailed AI app testing methodology.

1. System Prompt Review

- Evaluate how system prompts, user inputs, and retrieved documents are merged.

- Check how the model mixes its internal instructions (“system prompt”), what the user says, and any extra documents it retrieves or uses.

- Check for prompt leakage. Can the model be coaxed into revealing hidden instructions?

2. Output Validation

- Trace where LLM outputs are consumed downstream. Check thoroughly If passed into code execution, APIs, or SQL, attempt injection payloads.

- Submit injection payloads to check for vulnerabilities in code execution, APIs, or SQL queries.

- Ensure that all actions triggered by LLM output are validated server-side.

3. Retrieval-Augmented Generation (RAG) Security

- Ensure and validate retrieved documents are properly signed or they come from trusted sources.

- Check poisoning by introducing malicious instructions into the retrieval index.

4. Side-Channel Analysis

- Continuously monitor CPU, memory, and network usage for patterns correlated to secret data.

- Look for timing discrepancies that reveal content indirectly.

5. Rate and Cost Controls

- Simulate high-complexity or looping queries to test API quota enforcement.

- Ensure per-user billing safeguards to prevent cost-exhaustion attacks.

6. Business Logic Verification

- Identify business rules enforced only in prompts (refund caps, approval workflows) and try to override them.

- Confirm such rules are implemented in backend logic.

Real-World Attack Chains to Simulate

Here is a list of attack chain to simulate:

- RAG Poison → Prompt Injection → Unauthorized Action: Poison a knowledge base entry with hidden instructions that cause the LLM to execute an unauthorized API call.

- Hallucination Supply-Chain Attack: Trick the model into recommending a non-existent package name controlled by the attacker, which results in remote code execution upon installation.

- Promptware Metadata Exploit: Embed malicious prompts in calendar invites, meeting notes, or task descriptions that the LLM processes during normal operations.

- Recursive Agent Exploitation: Trigger the LLM to create sub-agents that keep executing a malicious sequence without further attacker input.

- Cost-Exhaustion DoS: Drive up token usage through large context windows or infinite loops until the service halts or budget limits are exceeded.

Most Overlooked LLM Risks

Not all LLM risks are obvious. Some of the large language related risks are hidden, such as:

- Persistence of Known Vulnerabilities:Nearly one-third of critical AI app vulnerabilities remain unpatched months after discovery. Without disciplined remediation pipelines, detection alone offers no real defense.

- Conventional Bugs in AI-Integrated Code: Traditional flaws like path traversal, SQL injection, and weak authentication still appear inside AI-enhanced features; sometimes with greater impact due to automated reach.

Best Practices to Prevent LLM Security Risks

Here are the defensive recommendations:

Treat LLMs as Untrusted Components

- Establish strict boundaries around AI systems by implementing input validation, output verification, and access controls.

- Apply the same security principles used for third-party software, treating AI responses as potentially malicious until validated.

Instrument AI-Aware Logging

- Capture comprehensive interaction data including prompts, responses, and system states for security analysis.

- Maintain tamper-resistant logs with appropriate retention policies to support incident investigation and compliance requirements when attacks occur.

Enforce Human-in-the-Loop for High-Impact Actions

You cannot trust machines blindly. AI-driven machines require human authorization before AI systems execute operations affecting critical business functions, financial transactions, or sensitive data.

- Implement approval workflows that provide clear accountability and prevent automated execution of potentially harmful actions.

Harden RAG Pipelines

- Secure retrieval-augmented generation systems through document verification, content filtering, and access management.

- Apply cryptographic validation to ensure data integrity and prevent injection of malicious content into the knowledge base used by AI models.

Red Teaming with AI Adversaries

- Deploy systematic testing using adversarial AI to identify emerging vulnerabilities in your defenses.

- Conduct continuous security assessments that evolve attack methods, ensuring your protective measures remain effective against sophisticated prompt-based exploits.

However, organizations require a specialized AI and LLM security assessment partner to identify these issues.

Conclusion

AI app pentesting is no longer a specialized offensive security discipline. It demands expertise in several critical areas, such as traditional application security, adversarial machine learning, and orchestration logic, to handle LLM-based threats.

The threat landscape has expanded containing promptware, hallucination-driven supply-chain compromise, agentic abuse, and even AI-native worms. That requires organizations to take LLM integrations as critical infrastructure projects, and thoroughly test them to eliminate any chance of LLM vulnerability. Ignoring LLM security vulnerabilities can be risky. Contact us now to learn how we can help you avoid such risks.